We'll take you down memory lane in network management to show how far we've come since the days of nascent networks, and where we are heading.

Back in the late 1980s and early 1990s, network management was so young that we were not sure which network technology would predominate. It was primarily a battle between Ethernet and Token Ring, the latter heavily backed by IBM. ARCnet (Attached Resource Computer NETwork) was also in the mix, but it couldn't match the other two.


Ethernet had beaten Token Ring by the mid-1990s because it was the best fit for connecting many systems, with protocols governing how information was passed along between them. It beat Token Ring on speed and performance, and vendors started to rally around it in earnest.

The Rise and Fall of Novell

Novell NetWare (1996)

Back in the 1990s, everything ran on Novell's NetWare, a hugely popular network operating system that first came to market in 1983. I was a Certified NetWare Engineer (CNE) at the time. In the early 1990s, NetWare simply ran a lot faster than its primary competitor, Windows NT.

However, Microsoft was determined to win, and Novell had started to suffer from a bit of corporate arrogance. Microsoft simply outmaneuvered Novell, and by 2000 it had won the battle. No matter how great your product is, it's hard to beat Microsoft in the marketplace once it sets out to put you out of business.

Finding Network Problems and Consolidating Point Products

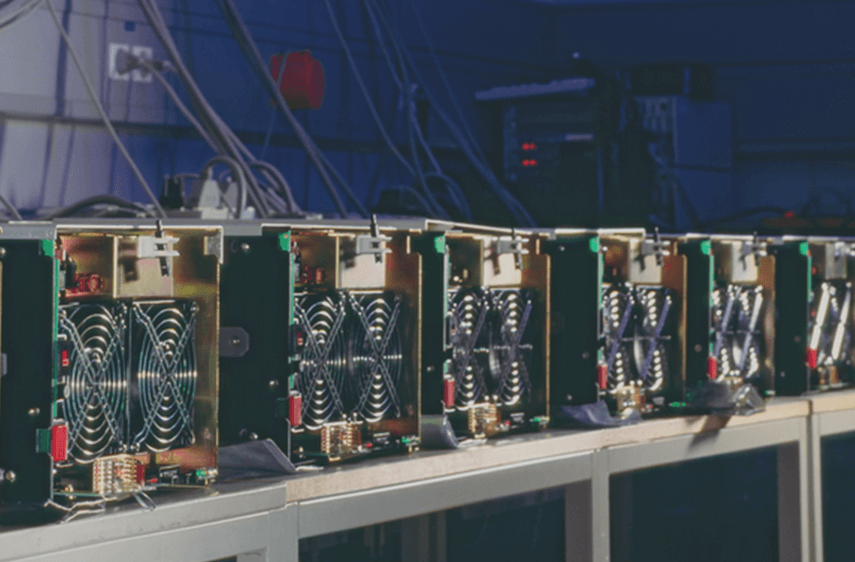

Thomas Conrad, Frye Computer Systems (later acquired by Seagate) and others developed network management software that basically grabbed statistics off the NIC (network interface controller). By the late 1990s, some people were trying to consolidate point products. CA was famous for years for buying up products but never really integrating them.


We knew what made up a solid network topology and began to seek more in-depth network monitoring capabilities. Yet there was no single way to monitor all the different areas of the network. You might be able to manage something with SNMP or with CMI (common management infrastructure), which was basically a way to instrument your applications and collect data.

Back then, I was a product evangelist for Tivoli's Global Enterprise Manager. The original Tivoli product was basically a bunch of Perl scripts. My product was supposed to be a single pane of glass, funneling all your alerts into one dashboard. The catch was that it pushed every alert into that dashboard, and that wasn't what customers wanted. Around this time, BMC and CA came into their own. People wanted a one-stop shop: a single vendor that could look at everything and make sense of it.

The problem was that using those platform vendors was expensive. People came to realize that installing their products required professional services engagements, and that an awful lot of manual labor and custom programming went into products like Tivoli to get them to work in complex environments.

Getting Down to Root Cause Analysis

By the early 2000s, people really wanted the elusive single pane of glass instead of several different utilities that each monitored a different aspect of the network. CA was acquiring products that were complementary to its own, but it never truly integrated them into its Unicenter platform. It simply had a single screen that launched all of these different point products behind the scenes.

This is where it became important for network management technologies to be designed to work together from the ground up. It was also when SMARTS (later acquired by EMC) came out and when folks began to think about root cause. Root cause analysis wasn't just about knowing that something was wrong, but about understanding the meaning behind the alert or alarm.


To some extent, that emphasis on root cause analysis is still with us today. We still talk about it because it is so hard. We talk about the relationships between components on a network, in an application, and across pretty much anything within an IT infrastructure. These relationships are difficult to discover automatically, but that's what everybody wanted. Network professionals didn't just want to understand what was wrong, but also why it was wrong. Once they knew the root cause, they would know how to fix it.
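To make the idea of reasoning over component relationships a little more concrete, here is a minimal Python sketch. It is not how SMARTS or any other product worked; it assumes a hypothetical, hand-written dependency map (`DEPENDS_ON`) and hypothetical helper names, and it skips the genuinely hard part described above, which is discovering those relationships automatically. An alarming component is treated as a probable root cause only when nothing it depends on, directly or transitively, is also alarming; every other alarm is treated as a downstream symptom.

```python
# Hypothetical topology: each component maps to the components it depends on.
DEPENDS_ON = {
    "app-server": ["access-switch-2"],
    "db-server": ["access-switch-2"],
    "access-switch-2": ["core-router"],
    "web-server": ["access-switch-1"],
    "access-switch-1": ["core-router"],
    "core-router": [],
}


def upstream_of(component, depends_on):
    """Collect every component that `component` depends on, directly or transitively."""
    seen = set()
    stack = list(depends_on.get(component, []))
    while stack:
        dep = stack.pop()
        if dep not in seen:
            seen.add(dep)
            stack.extend(depends_on.get(dep, []))
    return seen


def probable_root_causes(alarming, depends_on):
    """Keep only alarming components with no alarming component upstream of them."""
    alarming = set(alarming)
    return {c for c in alarming if not (upstream_of(c, depends_on) & alarming)}


if __name__ == "__main__":
    alarms = {"app-server", "db-server", "access-switch-2"}
    print(sorted(probable_root_causes(alarms, DEPENDS_ON)))  # ['access-switch-2']
```

In this example, three alarms collapse into one probable cause: the two servers are flagged only because the switch they sit behind is down.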

Cloud Adds Layers of Complexity, while SDN Holds Promise to Heal and Automate

We have made a lot of progress in the last 15 years. Cloud computing has come into its own. Public clouds created a new area within IT that, in some cases, IT was not going to own. These days the cloud adds another layer of complexity, because now you have to monitor resources that you may not own or have full visibility into.

Software-defined data centers, software-defined storage and software-defined networking (SDN) are where we are today, and where we are headed in the future. SDN promises self-healing and automation. Fortunately, unlike the technology we worked with years ago, SDN was designed with robust network management capabilities built in. I should note that SDN is relevant mainly to large enterprises today, while SMBs aren't going to get there for a while.

We've come a long way over the years, from defining the network to software-defining it, and everything in between.